ViewPoint UX Research Assistant Internship

Overview

ViewPoint is educational software in which participants engage with one another in simulations of real-world situations such as the creation of legislation, court trials, or crisis management. Over the course of 10 months, I collaborated with a faculty partner and the Center for Academic Innovation at the University of Michigan to discover the needs and behaviors of novice simulation designers, with the goal of increasing adoption of the software and retention of new users.

An example of a research proposal for this project can be found here.

Timeline

Ten months

My Role

UX Researcher

UX Methods

Literature Review, User Interviews, Heuristic Evaluation, Cognitive Task Analysis, Journey Map, Card Sorting, Participatory Design

Summary of Findings

Because the existing onboarding materials were proving insufficient for inexperienced simulation designers, the ViewPoint team sought to understand how best to support these users as they created their first simulations in the software. However, interviews revealed that our early assumptions about a possible solution were incorrect.

Early in the research process, my team assumed the best way to help new simulation designers and new users of the ViewPoint software was to create a library of simulation templates that could be quickly populated with unique content and put to use. To explore this idea further, I interviewed a number of new users who had completed their first simulation within the previous 3-5 months. Instead, I found that these users did not find the idea of a template useful. Key findings from the interviews included:

Need Examples

Novice simulation designers struggled to see how the various elements of the simulation would come together in the end. They reported that, rather than an empty template, they felt they needed completed simulations to reference when building their own.

Not Self-Reliant

The faculty partner who invented ViewPoint in conjunction with the Center for Academic Innovation personally assisted new users of the software as they created their first simulations. The users interviewed reported that they would have been unable to complete the process without this help.

Decision-Making

Results from the interviews indicated that our assumption about the way novice simulation designers create simulations (namely, that their decision-making resembled that of experts) was incorrect.

“I need a real-time cooking video to follow, but most cooking videos are edited. . .I have to go back to that step, again and again, to make sure I didn’t forget anything. AND I have to switch between the clip and my phone frequently. . .in the end, my phone was dirty and my steak was overcooked.”

Introducing Sous

Sous is a hands-free multimodal solution that gives personalized guidance based on visual context and the user's progress.

Sous is a two-part solution. The first element, Sous's camera, is a peripheral device used to "see" the current cooking environment and relay that information to a cloud service, which processes it and delivers feedback to the user via a connected device. Users communicate with the camera device exclusively through voice, eliminating the need to navigate the interface with icky hands.

The second element of Sous is the companion app, which runs on a phone or tablet. The app displays information about the stove or prep environment and guides the user through recipe steps, skills, and techniques.

Illustration of Sous device

Preliminary concept illustration

How it works

Sous is affixed to a hood vent, cabinet, or wall in the cooking or prep space

Uses its camera and image processing to glean insights and provide real-time guidance

App can store and search recipes from a database or import them from the internet using share functionality

Smartphone and Sous work in sync to provide progress-based instructions

Dynamic voice interface allows user to interact how they want, when they want

System learns user preferences and becomes more accurate over time

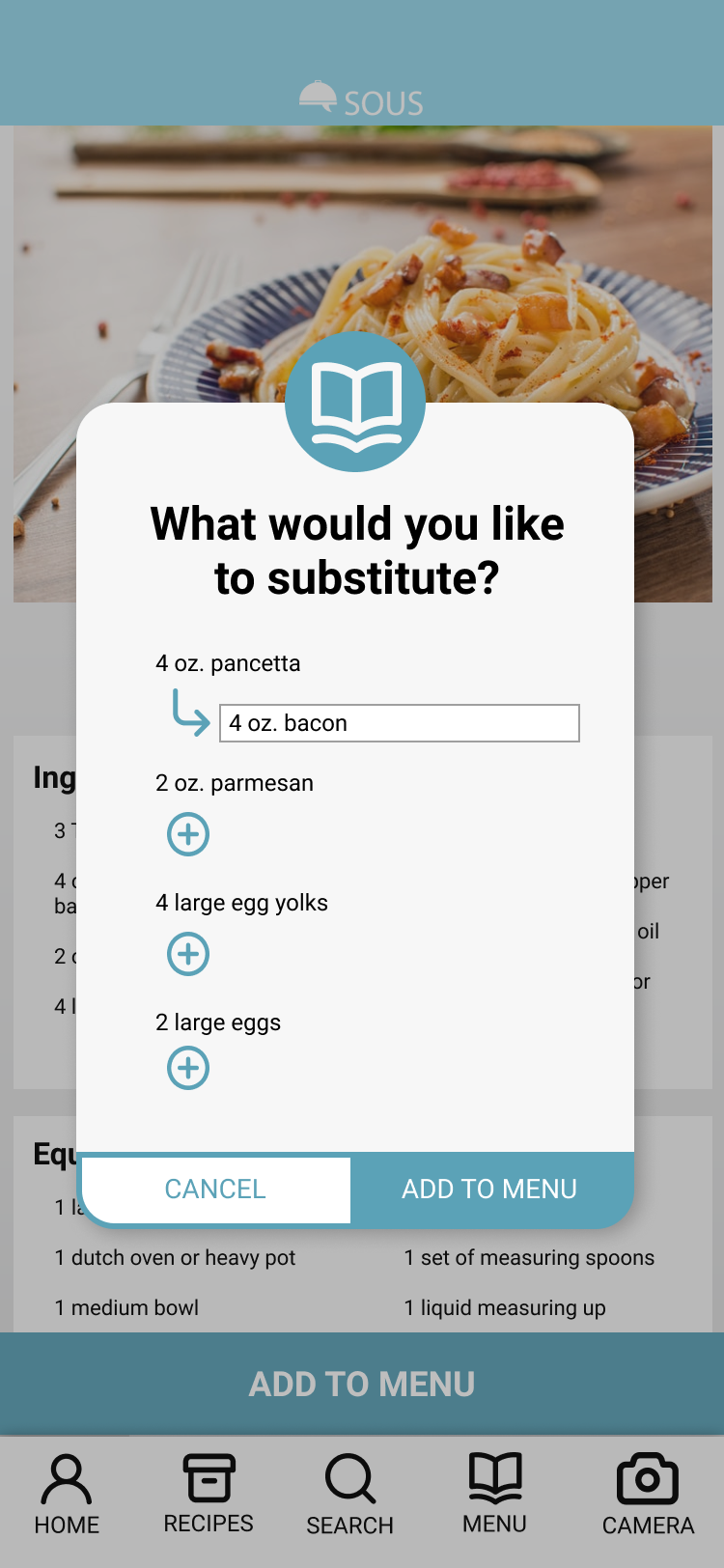

Storyboard exploring a use case for recipe substitution feature

Product Features

Multimodal Interface

Cooking is messy. Sous provides the option for traditional smartphone interaction, voice-only controls, or a combination.

Real-time progress tracking and guidance

Real-time guidance

Sous uses image recognition to detect safety concerns or opportunities to correct technique, and alerts the user. Guidance can be customized to the user's preferences.

Context-aware feedback

Sous parses complex questions and provides feedback and suggestions based on what it sees.

Progress-based instructions

Sous uses computer vision to track and guide your progress. Whether you ask Sous for the next step or look at your phone, the device and the app stay in sync.

Recipe integration

Users can easily store their favorite recipes in the Sous app by using the device's native share functionality. Users can also receive guidance on multiple recipes at once, for example when cooking a main and a side dish.

Intelligent, on-demand substitutions

You don’t always have what you need. Sous helps find substitute ingredients that suit the flavor profile of your dish and your preferences.

Recommendations based on preferences and habits

Over time, Sous will get to know its users and make recommendations for new recipes to try based on their skill level, preferences, and cooking habits.

Industry-standard recipe storage and search

Offering recipe search within the app provides continuity throughout the cooking user journey.